The LLM wall, Chime's IPO and is it robotaxi week?

Welcome to Cautious Optimism, a newsletter on tech, business, and power.

Happy Monday! There’s a lot to get to today, but keep an eye on the situation in LA. Rhetoric from the White House is ramping up, describing inverse-fairy-tale realities of the city’s overall safety while gunning for more federal intervention. The greater the perceived unrest and danger, the easier it is for the federal government to further claim the right to inflame tensions.

Today, we’re looking at the reported Meta-Scale deal, Apple’s AI paper, and the week’s upcoming tech events of note, including Tesla’s robotaxi launch and Chime’s IPO. To work! — Alex

📈 Trending Up: Hitting the wrong target … AI cheating suppression … Indian exports … medical accuracy … actual contrarianism … consumer AI startup growth … UK AI … purges … tariff pain …

📉 Trending Down: Prices, in China … one-sided detente … Vandersloot’s 2025 availability … peace … venture investing pace … the Russian air force … China-US trade … Robinhood and the S&P 500 …

Meta wants a piece of Scale

Bloomberg writes that Meta is considering a massive investment into Scale AI, the data labeling and AI evaluation company that was last valued at $14 billion by private investors. The total investment per the report could reach $10 billion.

Both the ‘berg and The Information have reported that Scale AI could reach a $25 billion valuation in an upcoming tender.

Precisely why Meta would want to own such a large implied stake in Scale AI is not immediately clear, apart from the chance that the transaction could become a lucrative investment in time. With $870 million in revenue last year, Scale is a large startup and one that could continue to scale — eventually listing in what could prove a blockbuster IPO.

Perhaps Meta has decided it needs more juice to stay competitive in the AI game. Its Llama 4 family of models has thus far failed to lead the market, so help would be welcome. Or maybe the social giant simply needs help with its own data work. Or it needs a customer for its compute buildout. It’s hard to say, but a potential eleven-figure check from Meta is big enough news on its own that we need to bear it in mind.

Have a hypothesis for why Meta wants a big piece of Scale? Hit reply, let us know. Best answer gets a hug.

The Apple AI paper vs. AI progress

For at least the second time this year, there’s new reason to doubt the current AI boom. Earlier in 2025, the arrival of DeepSeek’s R1 model shook the foundations of many a billion-dollar bet by offering up strong performance for less. Because DeepSeek’s model had been so much cheaper to build, the thinking went, the project to build out future-scale data centers could become a worldwide flop.

Since then, we’ve seen token generation from major cloud providers scale incredibly rapidly, AI models improve, and the companies atop the model leaderboards trade places. In other words, despite the shock, things are progressing about as expected, apart from the DeepSeek-generated, and now seemingly temporary, freakout.

Enter Apple, whose recent paper casts doubt on the thinking abilities of so-called ‘reasoning’ AI models, those that use inference-time compute to answer user prompts. The main thrust of the argument is easy to grok from the paper’s abstract:

Through extensive experimentation across diverse puzzles, we show that frontier [large reasoning models] face a complete accuracy collapse beyond certain complexities. Moreover, they exhibit a counterintuitive scaling limit: their reasoning effort increases with problem complexity up to a point, then declines despite having an adequate token budget.

The paper does not say that reasoning models are no more capable than their non-reasoning siblings. But it does show that as certain problems are made more complex, both reasoning and non-reasoning models quickly lose the ability to make any progress on a given task.

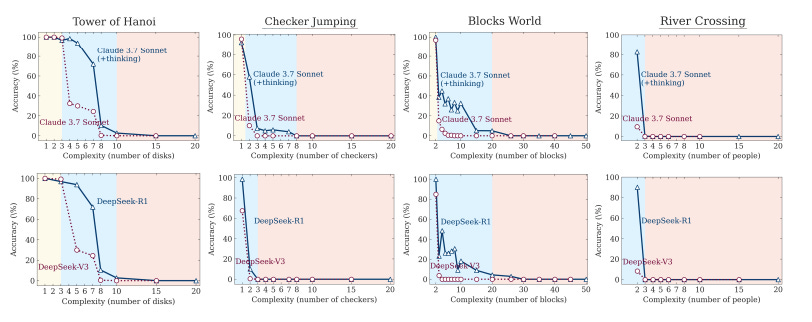

You can see the ‘collapse’ Apple mentioned in the following set of charts, which show the ability — or lack thereof — of AI models from Anthropic and DeepSeek to solve increasingly complicated puzzles:

As you can see, when given the Tower of Hanoi puzzle, both Anthropic’s and DeepSeek’s ‘thinking’ models did a bit better than their non-thinking peers. However, past a certain level of added complexity, the accuracy of both types of models fell to zero.
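For readers unfamiliar with the puzzle: Tower of Hanoi asks a solver to move a stack of n disks between three pegs, one disk at a time, never placing a larger disk on a smaller one. The textbook recursive solution needs 2^n - 1 moves, so each added disk roughly doubles the length of a correct answer, which is one way to see why complexity ramps up so fast. The Python sketch below shows that classic algorithm plus a simple move checker. It is an illustration only, not Apple’s actual prompt or evaluation harness; the peg names and the `hanoi` and `is_valid_solution` helpers are hypothetical.

```python
def hanoi(n, source="A", target="C", spare="B", moves=None):
    """Return the list of (from_peg, to_peg) moves that solves n disks (hypothetical helper)."""
    if moves is None:
        moves = []
    if n == 0:
        return moves
    # Move the top n-1 disks out of the way, onto the spare peg.
    hanoi(n - 1, source, spare, target, moves)
    # Move the largest remaining disk straight to the target peg.
    moves.append((source, target))
    # Move the n-1 disks from the spare peg back on top of it.
    hanoi(n - 1, spare, target, source, moves)
    return moves


def is_valid_solution(n, moves):
    """Replay moves step by step and check every rule (hypothetical checker)."""
    # Each peg is a list with its top disk last; disk 1 is the smallest.
    pegs = {"A": list(range(n, 0, -1)), "B": [], "C": []}
    for src, dst in moves:
        if not pegs[src]:
            return False  # tried to move from an empty peg
        disk = pegs[src][-1]
        if pegs[dst] and pegs[dst][-1] < disk:
            return False  # tried to put a larger disk on a smaller one
        pegs[dst].append(pegs[src].pop())
    # Solved only if every disk ends up on peg C in the original order.
    return pegs["C"] == list(range(n, 0, -1))


for n in (1, 3, 10):
    print(n, "disks:", len(hanoi(n)), "moves, valid =", is_valid_solution(n, hanoi(n)))
```

Running it shows 1 disk needs 1 move, 3 disks need 7, and 10 disks need 1,023, all of them valid. A model that “only needs to execute the prescribed steps” still has to emit every one of those moves without a single illegal one.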

This implies, Apple writes, “a fundamental scaling limitation in the thinking capabilities of current reasoning models relative to problem complexity.” Turning to the experts, AI researcher and entrepreneur Gary Marcus dug into the Apple paper, writing the following:

The new Apple paper adds to the force [LLM critics] by showing that even the latest of these new-fangled “reasoning models” still — even having scaled beyond o1 — fail to reason beyond the distribution reliably, on a whole bunch of classic problems, like the Tower of Hanoi. For anyone hoping that “reasoning” or “inference time compute” would get LLMs back on track, and take away the pain of multiple failures at getting pure scaling to yield something worthy of the name GPT-5, this is bad news.

Yes, it’s not good that current SOTA LLMs have material limitations. From an industry perspective, it would be better if they did not, as that would imply greater progress towards AGI.

Even worse, Apple uncovered a few other flaws in current LLMs that matter:

“We observe that reasoning models initially increase their thinking tokens proportionally with problem complexity. However, upon approaching a critical threshold—which closely corresponds to their accuracy collapse point—models counterintuitively begin to reduce their reasoning effort despite increasing problem difficulty.”

It’s not good that LLMs appear to give up past a certain complexity point. That implies a cap today on their abilities, and an issue that will have to be solved.

“[E]ven when we provide the algorithm in the prompt—so that the model only needs to execute the prescribed steps—performance does not improve, and the observed collapse still occurs at roughly the same point.”

Here, Apple supplied the reasoning models with the algorithm needed to solve the puzzle, and the collapse persisted; again, the result shows a weakness that needs to be solved for LLMs to continue to advance.

I can absolutely understand why LLM critics feel vindicated by the Apple study. It does detail real limitations of current LLMs. The question for the market is whether Apple’s paper describes new problems that the major foundation model companies have yet to uncover and start working against, or known issues that are, presumably, already being worked on.

The question of ‘can LLMs get us all the way to AGI,’ I think, is the wrong one; I would ask ‘will LLMs form an important plank of future AGI systems,’ and there I still think that the answer is yes.

What Apple and Marcus imply, however, is the need for new AI breakthroughs that are not merely iterative LLM upgrades. That means there are still trillion-dollar prizes to be won by someone. And I want to know if a startup or an incumbent will win them.

Sidebar: While it’s good to better understand LLMs' limitations, we also need to remember their strengths. Read this for more on the latter.

Chime’s IPO, Tesla’s robotaxi launch

Apart from a handful of earnings reports, there are two news items in the offing that hold CO’s anticipatory attention:

Tesla’s robotaxi business is supposed to launch this week. We don’t know precisely what form the service will take, but reporting indicates that June 12th is the kickoff date. The more cars Tesla puts to work, the less human intervention they need, and the cleaner the launch, the better the day will prove for Tesla shareholders.

Chime’s IPO is expected to price on Wednesday and begin trading Thursday. Coming off the red-hot Circle debut, and a follow-up note from Gemini that it, too, is looking to list, Chime may have picked a great moment to pull the trigger and go public.

The question of price remains. As of this morning, there’s no new S-1/A filing from Chime on offer. That means that its current $24 to $26 per-share IPO price range is still the most recent benchmark we have to consider its worth. As RenCap notes, at the midpoint of that range, Chime would be worth around $10.5 billion.

The issue for Chime is that while it’s impressive to list as a decacorn, the company is also worth but a fraction of its peak ZIRP valuation of around $25 billion. I still anticipate Chime will try to price above its initial range, as that interval feels conservative. The question is how much ground it can make up.
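For a rough sense of what those figures mean, the sketch below backs an implied share count out of RenCap’s midpoint estimate and compares it with the ZIRP-era peak. It assumes the “around $10.5 billion” and “around $25 billion” figures above are exact and that RenCap’s estimate uses a single share count across the range; the basis of that count (basic vs. fully diluted) is not stated, so treat every output as approximate.

```python
# Inputs from the text; both valuations are described as approximate.
LOW, HIGH = 24, 26                 # current IPO range, USD per share
MIDPOINT_VALUE = 10_500_000_000    # RenCap: ~$10.5B at the range midpoint
PEAK_VALUE = 25_000_000_000        # ~$25B peak private (ZIRP-era) valuation

midpoint = (LOW + HIGH) / 2        # midpoint price per share

# Assumption: valuation = share count x price, with one share count throughout.
implied_shares = MIDPOINT_VALUE / midpoint
low_value = implied_shares * LOW
high_value = implied_shares * HIGH
share_of_peak = MIDPOINT_VALUE / PEAK_VALUE

print(f"Implied shares: {implied_shares / 1e6:.0f} million")
print(f"Range: ${low_value / 1e9:.2f}B to ${high_value / 1e9:.2f}B")
print(f"Midpoint vs. peak: {share_of_peak:.0%}")
```

With these inputs it prints about 420 million implied shares, a $10.08 billion to $10.92 billion range, and a midpoint worth 42% of the peak. Even pricing at the top of the current range leaves most of the gap to $25 billion unclosed.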

Why are we so fixated on price? Apart from the exit valuation of a startup being the critical scorekeeping figure for the venture game (along with time from investment to liquidity, of course), there’s always the risk of later investors building so much downside protection into their checks that those who put capital in earlier suffer dilution.

But that aside, getting so much equity out and trading in a single go is still a huge moment for a basket of venture investors. So, Christmas didn’t really come last year, but it may come this June.

Good luck to everyone playing!

💚